How businesses can measure AI success with KPIs

AI KPIs should include direct and indirect metrics. Learn about the standard and generative AI-specific KPIs that will help you gauge the success of your AI projects.

To measure the success of AI projects, particularly generative AI, organizations should establish key performance indicators, or KPIs, to improve project efficiency and deliver meaningful societal benefits. Generative AI is distinct in its ability to produce content -- whether text, images or other forms of media -- based on training data, and this requires specific metrics for success evaluation.

Traditional AI implementations involve machine learning to build foundational models, algorithms and training methods. Generative AI follows similar processes, but also necessitates creativity-based KPIs, which are different from KPIs used to evaluate typical predictive AI models. After training, developers measure generative models by comparing the output against benchmarks for creativity, relevance or diversity, depending on the application.

Measuring AI success is especially challenging for generative AI because subjective elements are frequently involved. Therefore, both measurable output (objective) and human evaluative feedback (subjective) are necessary to assess generative AI's performance. It is essential to choose the right evaluation tools and to test with real-world data that closely mirrors production environments.

Defining AI KPIs

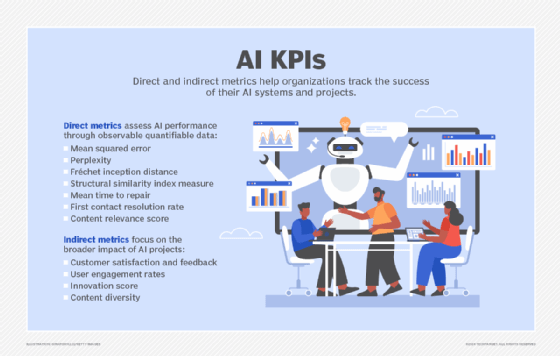

AI KPIs can be divided into two types: direct metrics and indirect metrics.

Direct metrics

A critical direct metric in machine learning, including generative AI, is mean squared error. This is the average of the squared differences between the generated output and the intended result, which helps to quantify errors in training. Lower values indicate output that is closer to the target.
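As a minimal sketch of the calculation, assuming the model's outputs and the intended results are available as paired lists of numbers, mean squared error can be computed as follows. The function name and the sample values are hypothetical and chosen only for illustration.

```python
def mean_squared_error(predicted, target):
    """Average of the squared differences between paired values."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    if not predicted:
        raise ValueError("inputs must not be empty")
    squared_errors = [(p - t) ** 2 for p, t in zip(predicted, target)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical values, for illustration only.
predicted = [2.5, 0.0, 2.0, 8.0]
target = [3.0, -0.5, 2.0, 7.0]
mse = mean_squared_error(predicted, target)
print(f"MSE: {mse}")
```

Because the differences are squared, a single large miss raises the score more than several small ones.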

This article is part of

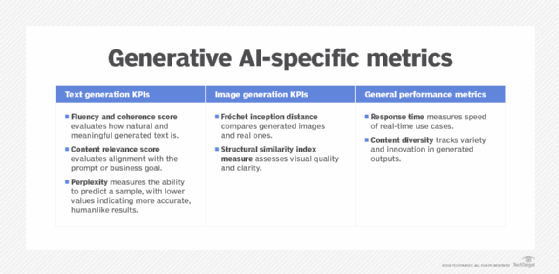

A guide to artificial intelligence in the enterprise

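Another common metric is perplexity, particularly for language-based generative AI models. Perplexity measures how well a language model predicts a sample of text: It is the exponential of the average negative log-probability the model assigns to each token in the sample. Lower perplexity means the model finds the text less surprising, which suggests it is better at producing humanlike text, although perplexity alone does not show that the content is factually accurate.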
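To make the perplexity calculation concrete, here is a minimal sketch that assumes the probabilities a model assigned to each observed token have already been collected. The function name and the probability values are hypothetical; in practice these numbers would come from the model being evaluated.

```python
import math


def perplexity(token_probs):
    """Exponential of the average negative log-probability of the observed tokens."""
    if not token_probs:
        raise ValueError("token_probs must not be empty")
    if any(p <= 0.0 or p > 1.0 for p in token_probs):
        raise ValueError("each probability must be in the range (0, 1]")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Hypothetical per-token probabilities, for illustration only.
confident_model = perplexity([0.5, 0.5, 0.5, 0.5])
uncertain_model = perplexity([0.1, 0.1, 0.1, 0.1])
print(confident_model, uncertain_model)
```

In this example, the model that assigns higher probabilities to the observed tokens ends up with the lower perplexity.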

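For generative AI applications that produce media or images, the Fréchet inception distance can be a helpful metric. Introduced in 2017, FID measures the quality of generated images by comparing the statistical distribution of their features, as extracted by a pretrained Inception image classification network, with the distribution of features from real images. A lower FID indicates that the generated images more closely resemble the real ones.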
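The sketch below illustrates only the distance calculation behind FID, under two loudly stated simplifications: It assumes feature vectors have already been extracted from the images, and it treats each feature dimension independently (a diagonal covariance), whereas the standard metric uses full covariance matrices over Inception network features. The function name and the tiny feature vectors are hypothetical.

```python
import math
from statistics import fmean, pvariance


def diagonal_frechet_distance(real_feats, gen_feats):
    """Squared Frechet distance between two Gaussians fitted per feature
    dimension. Simplification: covariance is assumed to be diagonal."""
    if not real_feats or not gen_feats:
        raise ValueError("both feature sets must be non-empty")
    total = 0.0
    # zip(*rows) transposes the row-per-image layout into per-dimension columns.
    for real_col, gen_col in zip(zip(*real_feats), zip(*gen_feats)):
        mean_gap = fmean(real_col) - fmean(gen_col)
        std_gap = math.sqrt(pvariance(real_col)) - math.sqrt(pvariance(gen_col))
        total += mean_gap ** 2 + std_gap ** 2
    return total


# Hypothetical two-dimensional features for two images per set.
real = [[0.0, 1.0], [2.0, 3.0]]
generated = [[1.0, 2.0], [3.0, 4.0]]
distance = diagonal_frechet_distance(real, generated)
print(distance)
```

A production evaluation would normally rely on an established FID implementation rather than a hand-rolled one, since scores are only comparable when the feature extractor and preprocessing match.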

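Other image-based metrics might include the structural similarity index measure, which was published in 2004 and has been widely adopted in the video and broadcast industry. SSIM assesses the perceived quality of a generated image compared with a reference image, based on luminance, contrast and structure. Scores closer to 1 indicate greater similarity.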
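As a rough illustration of how SSIM combines luminance, contrast and structure, the following sketch computes the index once over a whole grayscale image supplied as a flat list of pixel values. This is a simplification: Standard implementations compute SSIM over small sliding windows and average the results. The function name and pixel values are hypothetical, and the constants 0.01 and 0.03 are the commonly used defaults.

```python
from statistics import fmean


def global_ssim(x, y, dynamic_range=255.0):
    """SSIM computed once over the whole image (no sliding window)."""
    if len(x) != len(y) or not x:
        raise ValueError("images must be non-empty and the same size")
    c1 = (0.01 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = fmean(x), fmean(y)
    var_x = fmean([(a - mu_x) ** 2 for a in x])
    var_y = fmean([(b - mu_y) ** 2 for b in y])
    cov_xy = fmean([(a - mu_x) * (b - mu_y) for a, b in zip(x, y)])
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical 2x2 grayscale images, flattened to lists.
reference = [10, 50, 90, 130]
reversed_image = [130, 90, 50, 10]
same_score = global_ssim(reference, reference)
different_score = global_ssim(reference, reversed_image)
print(same_score, different_score)
```

An image compared with itself scores 1 (up to floating-point rounding), while the reversed image scores lower because its structure no longer matches the reference.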

Existing KPIs that have business and IT relevance also apply to AI projects, including the following:

- Mean time to repair measures how quickly issues can be addressed.

- First contact resolution rate gauges what percentage of problems are resolved at the first level of support without escalation.

- Content relevance score, for text-based models, can serve as a key indicator of how closely the generated content matches business or creative needs.
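The first two KPIs in this list are simple ratios over support records. The sketch below assumes a hypothetical `Ticket` record with a repair time and a first-contact flag; real service desk tools will expose these fields under their own names.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    """Hypothetical support record, for illustration only."""
    hours_to_repair: float
    resolved_on_first_contact: bool


def mean_time_to_repair(tickets):
    """Average number of hours needed to resolve an issue."""
    return sum(t.hours_to_repair for t in tickets) / len(tickets)


def first_contact_resolution_rate(tickets):
    """Share of tickets resolved at the first level of support."""
    return sum(t.resolved_on_first_contact for t in tickets) / len(tickets)


# Hypothetical sample data.
tickets = [
    Ticket(2.0, True),
    Ticket(4.0, False),
    Ticket(6.0, True),
    Ticket(4.0, True),
]
mttr = mean_time_to_repair(tickets)
fcr = first_contact_resolution_rate(tickets)
print(f"MTTR: {mttr} hours, FCR: {fcr:.0%}")
```

Tracking both values before and after an AI rollout gives a like-for-like view of whether the system is speeding up resolution.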

Indirect metrics

Indirect metrics are equally important, especially for generative AI, where subjective measures such as creativity and user satisfaction play significant roles. These metrics, which derive from direct metrics, focus on broader impacts:

- Customer satisfaction and feedback. In generative AI used for customer-facing applications like chatbots, human feedback can help evaluate how well the AI serves its intended purpose.

- User engagement rates. For applications that generate creative outputs like text, art or music, user engagement or interaction with generated content serves as a strong indirect metric.

- Innovation score. This measures how frequently the generative AI comes up with novel, useful ideas or creative outputs that meet specific innovation goals.

- Content diversity. This metric evaluates the ability of a generative AI system to produce varied, high-quality outputs across different contexts or domains.
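Some of these indirect metrics can be approximated with simple proxies. As one example, the sketch below estimates content diversity for text outputs using a distinct n-gram ratio: the number of unique word sequences divided by the total number of word sequences across a batch of outputs. This particular proxy is an assumption made here for illustration rather than a measure prescribed above, and it captures variety only, not quality.

```python
def distinct_n(outputs, n=2):
    """Ratio of unique word n-grams to total word n-grams across outputs."""
    total = 0
    unique = set()
    for text in outputs:
        tokens = text.lower().split()
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


# Hypothetical generated outputs.
repetitive = distinct_n(["the cat sat", "the cat sat"])
varied = distinct_n(["the cat sat", "a dog ran"])
print(repetitive, varied)
```

A ratio near 1 means the outputs rarely repeat the same phrasing, while a low ratio signals that the system keeps producing similar text.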

While indirect metrics are important, they should not be the sole measure of an AI system's impact. They must be backed by direct, observable metrics to ensure both quantitative and qualitative success.

How KPIs measure AI success

AI-related KPIs -- whether direct or indirect -- help organizations measure success by quantifying return on investment and operational efficiency. For generative AI, ROI can be measured in terms of creativity, time saved in content creation, user satisfaction and accuracy in generating outputs that align with business or user needs.

ROI in generative AI is also about scalability -- that is, how many outputs can be generated in a given time period while maintaining quality. For example, an organization might invest in generative AI to automate the creation of marketing materials. If the AI can reduce human design time by 50%, that becomes a tangible measure of ROI.

An additional example would be a business that uses generative AI to enhance customer experience. By reducing the time required to generate personalized responses in real-time chat support by 30%, the organization could save significant costs in labor and enhance user engagement, contributing to both time-based ROI and customer retention.
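The arithmetic behind these time-based examples can be sketched as follows. All figures here (monthly hours, hourly cost and AI spend) are hypothetical and exist only to show the calculation; the 50% reduction mirrors the marketing example above.

```python
def time_savings(baseline_hours, reduction_fraction, hourly_cost):
    """Hours and labor cost saved when a task takes proportionally less time."""
    hours_saved = baseline_hours * reduction_fraction
    return hours_saved, hours_saved * hourly_cost


def simple_roi(benefit, cost):
    """Net benefit divided by cost, expressed as a fraction."""
    return (benefit - cost) / cost


# Hypothetical inputs: 200 design hours per month at $60 per hour,
# a 50% reduction in design time, and $2,000 per month spent on the AI tool.
hours_saved, cost_saved = time_savings(200, 0.5, 60)
roi = simple_roi(cost_saved, 2000)
print(f"Hours saved: {hours_saved}, cost saved: ${cost_saved}, ROI: {roi:.0%}")
```

The same calculation applies to the chat support example by substituting a 30% reduction and the relevant labor figures.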

KPIs enable companies to quantify the success of AI by focusing on the measurable, observable outputs first, then evaluating indirect benefits like customer satisfaction or creativity. When applied effectively, these KPIs help track not only technical performance, but also the real-world impact of AI and generative AI systems.

Jerald Murphy is senior vice president of research and consulting with Nemertes Research. With more than three decades of technology experience, Murphy has worked on a range of technology topics, including neural networking research, integrated circuit design, computer programming and global data center design. He was also the CEO of a managed services company.